Video Generation

- Tech Stack: PyTorch, Diffusion Models, DDIM

- Github URL: Project Link

- Publication URL: Research Paper Link

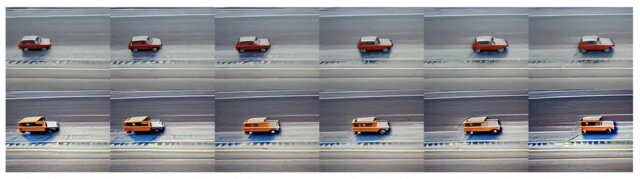

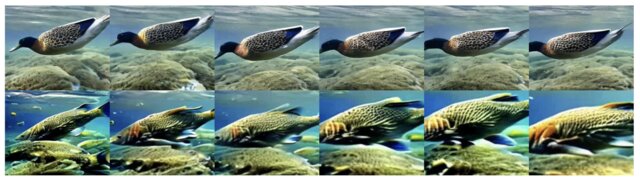

Developed a text to video generation tool using advanced stable diffusion models, focusing on customization of path, object, and motion dynamics in a video. This project utilized advanced dataset generated from models such as MotionDirector, Trailblazer, Tune-A-Video, and Text2LIVE to integrate textual prompts and navigational paths into dynamic video content. By leveraging stable diffusion techniques, we were able to achieve high-quality video generation with enhanced control over motion and object dynamics.

Our system allows for the precise customization of videos using simple text prompts, enabling the creation of contextually relevant and engaging content. The results demonstrated significant advancements in video synthesis, offering scalable solutions that could transform video generation practices across multiple applications, including virtual reality, education, and entertainment.

A red car moving on the road from left to right -> A yellow jeep moving on the road from left to right

A duck diving underwater from right to left -> A fish diving underwater from right to left with a zoom in

A rabbit walking on ground from top left to bottom right -> A dog walking on ground from top left to bottom right

A horse galloping on ground from right to left -> A tiger galloping on ground from right to left

A fish swimming in the ocean from right to left -> A tortoise swimming in the ocean from right to left